Understanding consumer behavior and optimizing marketing campaigns are crucial for success. A/B testing lets marketers make data-driven decisions, reducing risk and improving return on investment (ROI). By systematically testing variations of marketing elements, businesses learn what resonates with their target audience. This article explores A/B testing ideas that marketers can apply across channels, including email marketing, website optimization, and paid advertising. For marketers seeking to refine their strategies, A/B testing offers a path toward continuous, data-backed improvement.

From subtle changes in call-to-action buttons to comprehensive redesigns of landing pages, the possibilities for A/B testing are extensive. This article provides a curated selection of practical and impactful A/B testing ideas, categorized by marketing channel for easy navigation and implementation. Whether you are a seasoned marketer looking for fresh inspiration or just beginning your journey with A/B testing, this compilation offers a valuable resource to guide your experimentation and drive significant improvements in your marketing performance. By understanding the principles of A/B testing and applying these targeted strategies, marketers can effectively enhance engagement, conversion rates, and ultimately, the bottom line.

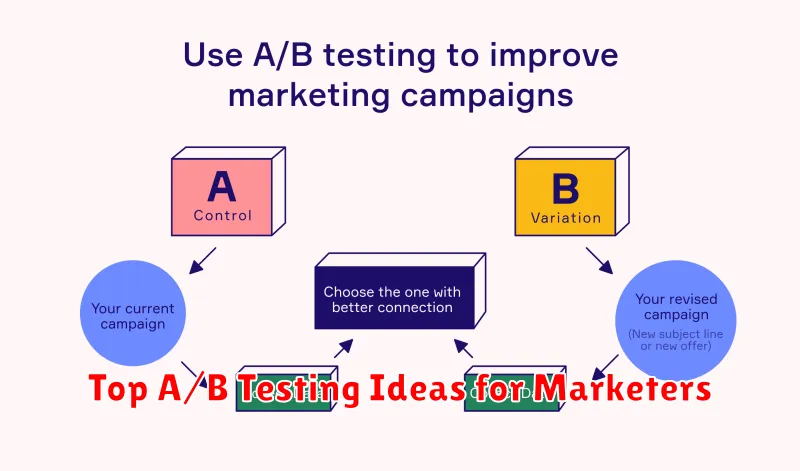

Why A/B Testing Matters

A/B testing provides a data-driven approach to optimizing marketing campaigns. It allows marketers to make informed decisions based on actual user interactions, rather than relying on assumptions.

By testing different variations of marketing elements, businesses can identify what resonates most effectively with their target audience. This leads to improved conversion rates, increased engagement, and ultimately, a higher return on investment (ROI).

A/B testing reduces guesswork and supports continuous improvement. By testing and iterating regularly, marketers can refine their strategies based on evidence rather than opinion.

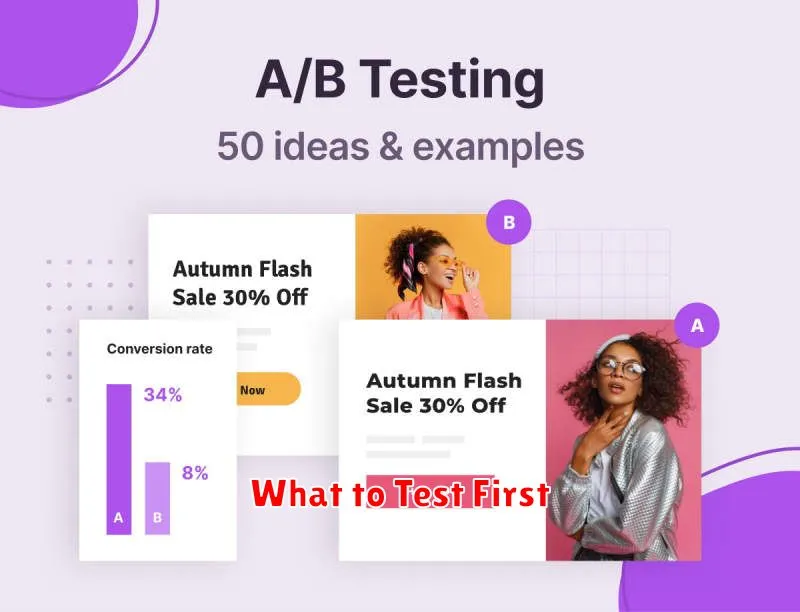

What to Test First

Prioritizing A/B tests is crucial for maximizing impact. Start by focusing on elements with the highest potential for improvement and alignment with your overall marketing goals. High-impact areas often include those directly tied to conversions or engagement.

Consider testing elements within your sales funnel where drop-off rates are high. For example, if cart abandonment is a significant issue, experimenting with different checkout processes could yield substantial gains.
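As a rough illustration of finding the step with the highest drop-off, the sketch below compares visitor counts between consecutive funnel steps. The step names and counts are hypothetical, and `biggest_drop_off` is an illustrative helper, not part of any analytics tool.

```python
def biggest_drop_off(funnel):
    """Return (from_step, to_step, drop_rate) for the largest drop between steps.

    `funnel` is an ordered list of (step_name, visitor_count) pairs.
    """
    worst = None
    for (name_a, count_a), (name_b, count_b) in zip(funnel, funnel[1:]):
        if count_a == 0:
            continue  # avoid dividing by zero for empty steps
        drop = 1 - count_b / count_a
        if worst is None or drop > worst[2]:
            worst = (name_a, name_b, drop)
    return worst


# Hypothetical visitor counts for each funnel step
funnel = [
    ("product_page", 10000),
    ("cart", 4000),
    ("checkout", 1000),
    ("purchase", 800),
]

step_from, step_to, drop = biggest_drop_off(funnel)
print(f"Largest drop-off: {step_from} -> {step_to} ({drop:.0%})")
```

With these made-up numbers, the cart-to-checkout step loses the largest share of visitors, so it would be a reasonable first candidate for testing.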

Low-effort tests, such as headline variations or call-to-action button colors, are quick to set up and can inform future, more complex tests. Small changes sometimes produce measurable improvements in key metrics, so they are worth testing rather than dismissing.

Running Tests the Right Way

Implementing A/B tests effectively is crucial for obtaining meaningful results. Planning is paramount. Define clear objectives for your test. What are you trying to achieve? Increased conversion rates? Higher click-through rates? Clearly stating your goals will guide your test design and analysis.

Sample size matters significantly. A small sample can lead to misleading conclusions, so make sure each variation receives enough visitors to detect the effect you care about. Online calculators can determine the appropriate sample size from four inputs: your baseline conversion rate, the smallest effect you want to detect, your desired confidence level, and the statistical power you want.
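For readers who prefer to compute this themselves, here is a minimal sketch of the standard sample size formula for comparing two conversion rates. It assumes a two-sided test, equal traffic to both variations, and an absolute (not relative) lift; the function name and default values are illustrative choices, not taken from any particular calculator.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, min_detectable_lift,
                            alpha=0.05, power=0.80):
    """Approximate visitors needed per variation for a two-proportion test.

    baseline_rate: current conversion rate, e.g. 0.05 for 5%
    min_detectable_lift: absolute lift to detect, e.g. 0.01 for +1 point
    alpha: significance level (0.05 corresponds to 95% confidence)
    power: probability of detecting the lift if it really exists
    """
    p1 = baseline_rate
    p2 = baseline_rate + min_detectable_lift
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical scenario: 5% baseline, aiming to detect a rise to 6%
n = sample_size_per_variant(0.05, 0.01)
print(f"Visitors needed per variation: {n}")
```

Note how quickly the requirement grows as the detectable lift shrinks: halving the lift roughly quadruples the sample needed.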

Duration of the test is also critical. Running a test for too short a period might not capture variations due to day-of-week effects or other cyclical patterns, so plan for whole weeks rather than a handful of days. Conversely, running a test for too long can introduce bias as user behavior and market conditions change.
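One simple way to plan duration is to divide the required sample by daily traffic and round up to whole weeks, so every day of the week is represented equally. The sketch below assumes traffic is split evenly across variations; `planned_duration_days` and the traffic figures are hypothetical.

```python
from math import ceil


def planned_duration_days(required_per_variant, num_variants, daily_visitors):
    """Days needed to reach the sample size, rounded up to full weeks."""
    total_needed = required_per_variant * num_variants
    raw_days = ceil(total_needed / daily_visitors)
    return ceil(raw_days / 7) * 7  # cover complete weekly cycles


# Hypothetical: 8,000 visitors per variation, 2 variations, 1,500 visitors/day
days = planned_duration_days(8000, 2, 1500)
print(f"Planned test duration: {days} days")
```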

Analysis of your A/B test data should be thorough. Don’t simply look at the top-level metrics. Dive deeper to understand why a variation performed better or worse. Segment your data to identify trends within specific user groups.

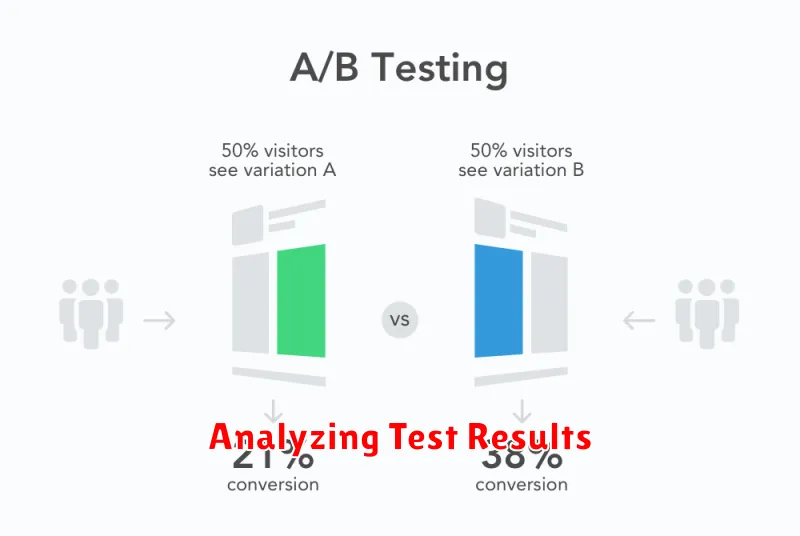

Analyzing Test Results

After running your A/B test for its planned duration and sample size, the next crucial step is analyzing the results. Avoid stopping a test the moment one variation pulls ahead, since early leads often disappear as more data arrives. Analysis involves more than simply seeing which variation “won.” Thorough analysis provides insights into user behavior and informs future optimization efforts.

Begin by examining the key metrics you defined at the start of your test. This could include conversion rates, click-through rates, bounce rates, or average order value, depending on your goals. Observe the difference in these metrics between your control and variations. Is the difference statistically significant? A small observed improvement may be nothing more than random variation if it does not pass a significance test.
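To make the significance check concrete, the following sketch runs a two-proportion z-test on conversion counts. This is one common approach among several, and the counts are hypothetical; the function name is illustrative.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) comparing variation B against control A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both variations convert equally
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: control 400/8000 conversions, variation 480/8000
z, p = two_proportion_z_test(400, 8000, 480, 8000)
print(f"z = {z:.2f}, p-value = {p:.4f}")
if p < 0.05:
    print("Difference is statistically significant at the 5% level.")
else:
    print("Difference could plausibly be due to chance.")
```

A p-value below the threshold you chose before the test (commonly 0.05) suggests the difference is unlikely to be random noise. It does not by itself say the difference is large enough to matter commercially.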

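Segmentation can reveal valuable insights. Analyzing results based on user demographics, device type, or acquisition source can uncover unexpected trends. For instance, one variation might perform well on mobile devices while another resonates with desktop users. This information can be used to further personalize the user experience. Keep in mind that each segment has a smaller sample than the full test, and checking many segments raises the odds of a chance finding, so treat segment-level results as hypotheses for follow-up tests.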
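A segment breakdown can be as simple as grouping visits by segment and variation before computing conversion rates. The sketch below assumes each visit is available as a `(segment, variant, converted)` tuple; that layout and the sample rows are hypothetical.

```python
from collections import defaultdict


def conversion_by_segment(rows):
    """Map (segment, variant) to its conversion rate.

    rows: iterable of (segment, variant, converted) tuples,
    where converted is True or False.
    """
    totals = defaultdict(lambda: [0, 0])  # [conversions, visitors]
    for segment, variant, converted in rows:
        bucket = totals[(segment, variant)]
        bucket[0] += int(converted)
        bucket[1] += 1
    return {key: conv / n for key, (conv, n) in totals.items()}


# Tiny hypothetical dataset, far too small for real conclusions
rows = [
    ("mobile", "A", True), ("mobile", "A", False),
    ("mobile", "B", True), ("mobile", "B", True),
    ("desktop", "A", True), ("desktop", "A", True),
    ("desktop", "B", False), ("desktop", "B", True),
]

for (segment, variant), rate in sorted(conversion_by_segment(rows).items()):
    print(f"{segment:8s} variant {variant}: {rate:.0%}")
```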

Documenting Learnings

Thorough documentation is crucial for successful A/B testing programs. Recording the entire process, from hypothesis to results, allows for informed future decisions and prevents repeating past mistakes. This creates a knowledge base for your team.

Key aspects to document include the initial hypothesis, the specific variations tested, the target audience, the duration of the test, and the key metrics used for evaluation. Clearly record the final results, whether or not they reached statistical significance, and any qualitative observations made during the testing period.

Organizing this information in a centralized location, such as a shared spreadsheet or dedicated testing platform, ensures accessibility and facilitates analysis across multiple tests.
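If a shared spreadsheet is your central location, a consistent record structure keeps entries comparable across tests. The sketch below shows one possible layout written out as CSV. The field names and the example entry are assumptions for illustration, not a standard schema.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class ExperimentRecord:
    name: str
    hypothesis: str
    variations: str
    audience: str
    start_date: str
    end_date: str
    primary_metric: str
    result: str
    significant: bool
    notes: str = ""


def write_records(file_obj, records, write_header=True):
    """Write experiment records as CSV rows to an open text file object."""
    names = [f.name for f in fields(ExperimentRecord)]
    writer = csv.DictWriter(file_obj, fieldnames=names)
    if write_header:
        writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


# Hypothetical entry; an in-memory buffer stands in for a real file
record = ExperimentRecord(
    name="checkout-button-copy",
    hypothesis="A more specific button label increases completed checkouts",
    variations="A: 'Continue' / B: 'Pay now'",
    audience="All cart visitors",
    start_date="2024-03-04",
    end_date="2024-03-17",
    primary_metric="checkout conversion rate",
    result="No detectable difference",
    significant=False,
    notes="Record inconclusive tests too, to avoid re-running them",
)

buffer = io.StringIO()
write_records(buffer, [record])
print(buffer.getvalue())
```

To append to an existing file instead, open it with `open(path, "a", newline="")` and pass `write_header=False`.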

Using Insights to Scale

A/B testing provides valuable insights that can be used to optimize and scale marketing campaigns. Analyzing the results of A/B tests goes beyond simply identifying the “winner.” It’s crucial to understand why a particular variation performed better.

By examining the data, you can identify key elements that resonate with your target audience. These insights can be applied to future campaigns and creative assets, and can even inform broader marketing strategies. For example, if a variation with a concise headline performs significantly better, this suggests your audience may prefer brevity, a hypothesis worth confirming in later tests.

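Scaling successful A/B testing results involves implementing the winning variation across broader marketing efforts. This might include applying the winning design elements to similar campaigns or adapting the successful messaging to other channels. Because audiences differ between channels, it is worth re-testing the change in each new context rather than assuming the result carries over.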